Introduction

Artificial intelligence has evolved from a futuristic concept into a tangible, transformative technology across industries. Among its most fascinating branches is generative AI, a field in which machines create content (images, music, text, and video) from simple instructions. At the forefront of this innovation is Stability AI, a company that has made waves with its open-source initiatives and cutting-edge AI models.

In 2025, the landscape of generative AI is more vibrant than ever, thanks to both open-source generative AI models and Google’s generative AI tools. These technologies aren’t just academic experiments; they are reshaping industries, enhancing creative workflows, and democratizing access to AI capabilities. In this post, we explore seven powerful generative AI models and tools that are transforming Stability AI and the broader AI ecosystem.

1. Stable Diffusion – The Flagship Open Source Model

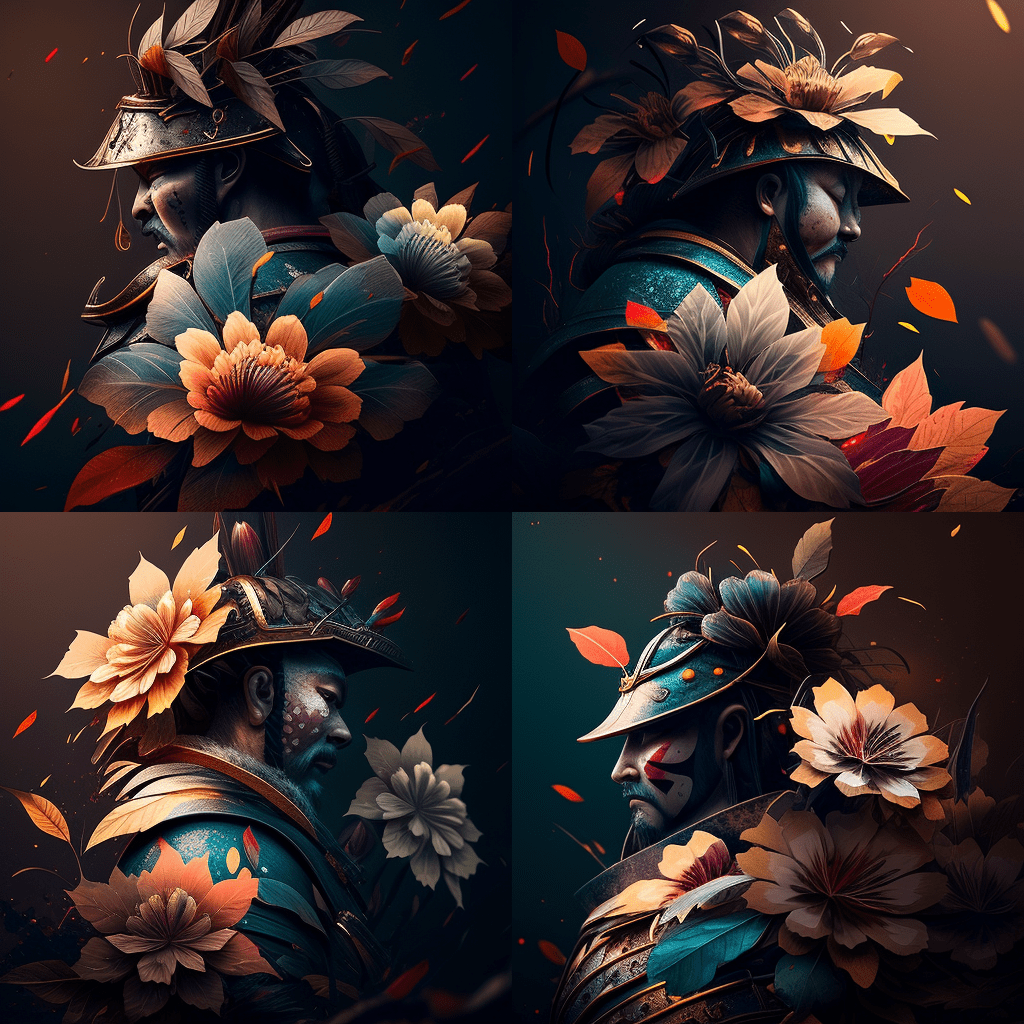

Stable Diffusion, developed by Stability AI, stands as one of the most influential open-source generative AI models in the world of image creation. Since its release, it has transformed how artists, developers, and businesses approach visual content generation. By enabling high-quality image creation from simple text prompts, Stable Diffusion has democratized access to advanced AI image generation, making powerful creative tools available to a global audience.

At its core, Stable Diffusion specializes in text-to-image synthesis: users describe an idea in natural language and receive a corresponding image within seconds. This has reshaped digital creativity by lowering traditional barriers such as artistic skill, expensive software, and specialized hardware, since the model can run on a consumer graphics card. (Source: https://stability.ai/news/stable-diffusion-public-rel)

Understanding Text-to-Image Generation

Text-to-image generation is the foundation of Stable Diffusion’s success. Users provide a written prompt describing a scene, object, style, or mood, and the model generates an image that matches the description. It does this by starting from random noise and removing that noise step by step, with each step guided by an encoding of the prompt. Because it was trained on a very large collection of image and caption pairs, the model has learned how words relate to composition, color, lighting, and artistic style, so it can produce anything from realistic photographs to abstract art.

What makes Stable Diffusion particularly impressive is its ability to generate detailed and coherent images even from complex prompts. Users can specify styles, environments, emotions, and artistic influences, giving them fine-grained control over the final output.
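To make the idea of a structured prompt concrete, here is a minimal Python sketch that assembles a subject, environment, mood, style, and artistic influences into a single prompt string. The `PromptSpec` class and its fields are hypothetical conveniences, not part of Stable Diffusion or any Stability AI API; the model itself simply receives the final text, and the comma-separated layout is only a common convention.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a text-to-image prompt."""
    subject: str
    style: str = ""
    environment: str = ""
    mood: str = ""
    influences: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        # Order the parts from most to least important and drop empty ones.
        parts = [self.subject, self.environment, self.mood, self.style]
        parts += [f"in the style of {name}" for name in self.influences]
        # Comma-separated phrases are a common prompt convention, not a rule.
        return ", ".join(p.strip() for p in parts if p and p.strip())


spec = PromptSpec(
    subject="a lighthouse on a rocky coast",
    environment="at dusk during a storm",
    mood="dramatic",
    style="oil painting",
    influences=["J. M. W. Turner"],
)
print(spec.to_prompt())
```

Keeping the parts separate makes it easy to vary one dimension (say, the style) while holding the rest of the prompt fixed.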

High-Quality Visual Output

Stable Diffusion is widely recognized for producing high-resolution, visually appealing images. It captures intricate details, realistic textures, and stylistic nuances, and depending on the model version and the prompt, its output can rival that of many proprietary models. This level of quality has made it a popular choice for professional designers, game developers, filmmakers, and marketers.

The model’s consistent output quality allows it to be used in real-world commercial applications such as concept art, product visualization, social media graphics, and advertising creatives.

Customizability and Fine-Tuning

One of the defining features of Stable Diffusion is its extensive customizability. Unlike closed-source AI tools, Stable Diffusion allows users to fine-tune the model for specific artistic styles, themes, or subjects. Developers and artists can fine-tune it on custom datasets to create unique visual outputs tailored to their needs.

This flexibility has given rise to specialized versions of Stable Diffusion focused on anime art, architectural visualization, fashion design, medical imaging concepts, and more. The ability to adapt the model makes it a powerful tool for niche creative industries.
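The post does not say how fine-tuning is done. One widely used approach in the Stable Diffusion community is low-rank adaptation (LoRA), which freezes a layer's weight matrix W and learns two small matrices B and A whose product, scaled by alpha / r, is added to it. The sketch below assumes LoRA and uses plain Python lists instead of a deep learning framework; it shows only how a trained adapter is merged back into a weight, performs no training, and the function names are illustrative.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    inner = len(b)
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    cols = len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(len(a))]


def lora_merge(w, b, a, alpha):
    """Return W + (alpha / r) * B @ A without modifying W.

    w: d x k frozen base weight; b: d x r; a: r x k; r is the adapter rank.
    """
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


# A 3x3 frozen weight adapted with a rank-1 update: B and A hold 3 + 3 = 6
# trainable numbers instead of 9. The saving grows quickly for real layer sizes.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]   # d x r
A = [[0.5, 0.5, 0.0]]       # r x k
print(lora_merge(W, B, A, alpha=1.0))
```

Because only B and A are trained, a style adapter can be stored and shared as a small file and swapped in or out of the same base model.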

Open-Source Accessibility and Community Innovation

Stable Diffusion’s open-source nature is one of its greatest strengths. Its code and model weights are publicly released, so anyone can download, modify, and build on them, subject to the license terms. This openness has encouraged rapid innovation and collaboration across the global AI community.

Developers have created countless extensions, interfaces, and tools that enhance usability, such as web-based image generators, desktop applications, and plugins for creative software. Artists and researchers continuously experiment with new techniques, pushing the boundaries of what generative AI can achieve.

This community-driven development has accelerated improvements in performance, efficiency, and creative control at a pace rarely seen in closed-source systems.

Impact on Stability AI

For Stability AI, Stable Diffusion has become the foundation of its identity and mission. The model has positioned the company as a leader in open and accessible generative AI technologies. By prioritizing openness over exclusivity, Stability AI has set a benchmark for ethical and inclusive AI development.

Stable Diffusion’s success has also helped Stability AI build partnerships, attract developers, and expand into related AI tools for video, audio, and 3D content generation. The model’s popularity has validated the company’s belief that open-source innovation can compete with—and even outperform—proprietary solutions.

Empowering Artists and Creators

Stable Diffusion has fundamentally changed the creative landscape. Artists no longer need advanced technical skills to bring their ideas to life. Writers, marketers, and entrepreneurs can generate visuals that support storytelling, branding, and communication with minimal effort.

Rather than replacing human creativity, Stable Diffusion acts as a creative amplifier, allowing users to explore ideas faster and iterate more freely. This has opened new opportunities for independent creators and small businesses that previously lacked access to professional design resources.

Applications Across Industries

The versatility of Stable Diffusion extends across multiple industries, including:

- Digital art and illustration

- Gaming and entertainment

- Marketing and advertising

- Education and research

- Product design and prototyping

- Social media content creation

Its ability to adapt to different styles and requirements makes it a universal tool for visual innovation.

Challenges and Ethical Considerations

While Stable Diffusion offers immense creative power, it also raises important ethical questions around copyright, misuse, and responsible AI use. Stability AI and the open-source community continue to address these concerns by developing guidelines, safeguards, and transparency measures.

Using the technology responsibly remains a shared obligation of developers, users, and the organizations that adopt it.

Conclusion

Stable Diffusion is more than just an AI image generator—it is a landmark achievement in open-source generative AI. By combining high-quality text-to-image generation, deep customization, and open accessibility, it has reshaped how visuals are created and shared.

As the flagship model of Stability AI, Stable Diffusion continues to inspire innovation, empower creators, and set new standards for what open-source AI can achieve. Its impact on the generative AI ecosystem is profound, and its influence will continue to grow as the technology evolves.

2. Google Imagen – Advanced Text-to-Image Generation

While open-source models like Stable Diffusion have transformed accessibility in generative AI, Google Imagen represents a different but equally powerful approach. Developed by Google, Imagen is a proprietary text-to-image generation model designed to deliver exceptionally photorealistic visuals. By combining advanced AI architectures with Google’s massive computational infrastructure, Imagen sets a high standard for image quality and contextual understanding.

Imagen is not just about creating images—it focuses on producing visuals that closely resemble real-world photography, making it especially appealing for professional and enterprise use cases.

What Makes Google Imagen Unique

Google Imagen stands out due to its strong emphasis on visual fidelity and realism. As described in Google’s original Imagen research, the model uses a large pretrained language model to interpret the prompt and a cascade of diffusion models to generate the image, first at low resolution and then upscaled in stages. As a result, even short or simple prompts can produce highly detailed images with realistic lighting, textures, and composition.

This capability allows Imagen to generate images that feel natural and believable rather than stylized or abstract. As a result, it is often preferred for applications where realism is critical.
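Imagen and Stable Diffusion both belong to the family of diffusion models (Stable Diffusion runs the process in a compressed latent space rather than on pixels). During training, such models learn to undo a forward process that gradually mixes a clean image x0 with Gaussian noise; generation then runs the learned process in reverse, starting from pure noise. As a minimal sketch, assuming the standard DDPM formulation and a linear noise schedule like the one in the original DDPM work (not Imagen’s own published configuration), the forward step x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps looks like this:

```python
import math
import random


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps, eps ~ N(0, 1)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * x + noise * e for x, e in zip(x0, eps)], eps


rng = random.Random(0)
alpha_bars = linear_alpha_bars(1000)
x0 = [0.8, -0.3, 0.5]  # a tiny stand-in for image pixel values
for t in (0, 500, 999):
    xt, _ = add_noise(x0, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 4), [round(v, 3) for v in xt])
```

As t grows, alpha_bar_t shrinks toward zero, so x_t keeps less of the original signal and more noise. A trained network is asked to predict eps from x_t and the prompt, which is what lets the model reverse the process at generation time.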

High-Fidelity Visual Output

One of Imagen’s most notable features is its ability to produce high-fidelity visuals. The model excels at rendering fine details such as shadows, reflections, skin tones, and environmental depth. Objects appear proportionate, faces look natural, and scenes maintain consistent perspective.

This level of realism is particularly valuable for industries like advertising, product visualization, architecture, and media production, where visual accuracy directly impacts credibility and audience engagement.

Deep Contextual Understanding

Google Imagen goes beyond basic text interpretation by demonstrating a strong understanding of context and nuance. It can accurately capture subtle details in prompts, such as emotions, relationships between objects, or specific stylistic cues.

For example, Imagen can differentiate between similar prompts and adjust outputs accordingly, ensuring that the generated image aligns closely with the user’s intent. This reduces the need for repeated prompt refinement and improves overall efficiency.

Seamless Integration with Google Cloud

Another major advantage of Google Imagen is its integration with Google Cloud. Businesses and developers can access Imagen through APIs on Vertex AI, Google Cloud’s machine learning platform, making it easier to incorporate advanced image generation into existing workflows and applications.

This cloud-based approach ensures scalability, reliability, and performance. Organizations can generate images on demand without managing complex infrastructure or hardware requirements. For enterprises, this makes Imagen a practical solution for large-scale deployment.
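For developers, API access usually comes down to sending a JSON request. The sketch below only builds a request for Imagen on Vertex AI and makes no network call. The endpoint pattern and the `instances` / `parameters` / `sampleCount` body are assumptions based on Vertex AI’s predict format for publisher models, and the project ID and model ID are placeholders, so check Google Cloud’s current Imagen documentation before relying on any of it.

```python
import json


def build_imagen_request(project_id, location, model_id, prompt, sample_count=1):
    """Return (url, json_body) for a Vertex AI predict call.

    Assumption: the URL pattern and the "instances"/"parameters" body follow
    Vertex AI's publisher-model predict format; confirm the current model ID
    and parameter names in Google Cloud's Imagen documentation.
    """
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}/"
        f"publishers/google/models/{model_id}:predict"
    )
    body = {
        "instances": [{"prompt": prompt}],
        "parameters": {"sampleCount": sample_count},
    }
    return url, json.dumps(body)


url, body = build_imagen_request(
    project_id="my-project",  # hypothetical project ID
    location="us-central1",
    model_id="MODEL_ID",      # placeholder: substitute a current Imagen model ID
    prompt="studio photo of a ceramic mug on a walnut desk, soft window light",
    sample_count=2,
)
print(url)
print(body)
```

To send it, you would POST `body` to `url` with an OAuth access token (for example, one from `gcloud auth print-access-token`) in an `Authorization: Bearer` header, or use Google’s official client libraries instead.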

Enterprise-Ready AI for Businesses

Google Imagen is designed with enterprise use in mind. Its proprietary nature allows Google to implement strict quality controls, safety measures, and usage policies. This makes it a reliable choice for businesses that require consistent output, data security, and compliance.

Companies can use Imagen for marketing campaigns, digital content creation, branding, and internal visualization tools while benefiting from Google’s robust AI governance framework.

Comparison with Open-Source Models

While open-source models like Stable Diffusion offer flexibility and customization, Google Imagen prioritizes quality, stability, and ease of use. Users do not need to fine-tune models or manage training datasets. Instead, they receive consistently high-quality results through a managed platform.

Many creators and organizations use Imagen as a complement to open-source tools, choosing the best model based on the task. Open-source solutions excel in experimentation and customization, while Imagen shines in professional-grade image generation.

The Role of Computational Power

A key factor behind Imagen’s performance is Google’s vast computational resources. The model benefits from powerful hardware, optimized infrastructure, and continuous improvements driven by Google’s AI research teams.

This computational advantage allows Imagen to handle complex prompts and produce refined results quickly, reinforcing its reputation as a premium generative AI solution.

Ethical Considerations and Responsible AI

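Google has placed strong emphasis on responsible AI development with Imagen. The model includes safeguards such as filtering of prompts and outputs to reduce misuse and prevent the generation of harmful or misleading content, and Imagen images on Google Cloud carry SynthID digital watermarks that identify them as AI-generated. These measures reflect Google’s broader commitment to ethical AI practices.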

For businesses and creators, this focus on responsibility provides additional confidence when adopting Imagen for real-world applications.

Significance in the Generative AI Landscape

Google Imagen represents the power of combining advanced algorithms with large-scale infrastructure. It demonstrates how proprietary models can push the boundaries of visual realism while maintaining reliability and safety.

For creators, developers, and companies, Imagen offers a compelling alternative or complement to open-source image generation models. Its photorealistic output, contextual understanding, and cloud-based accessibility make it a valuable tool in the evolving generative AI ecosystem.

Conclusion

Google Imagen is a leading example of advanced text-to-image generation, delivering photorealistic visuals through cutting-edge AI and powerful cloud infrastructure. While it differs from open-source models in terms of accessibility and customization, its strengths in quality, realism, and enterprise readiness make it a standout solution.

As generative AI continues to evolve, Google Imagen plays a crucial role in shaping the future of visual content creation, offering creators and businesses a reliable and high-performance image generation platform.

3. DALL·E 3 – OpenAI’s Generative Art Powerhouse

DALL·E 3, OpenAI’s text-to-image model available through ChatGPT and the OpenAI API, is another transformative generative AI tool. Although it is proprietary and separate from Stability AI, its strong adherence to detailed prompts has made it a reference point for open-source developers striving to match its output quality.

Key Highlights

- Creative flexibility: Can generate highly imaginative visuals that blend concepts seamlessly.

- Interactive prompting: Users can refine outputs iteratively, for example through conversation in ChatGPT.

- Cross-industry applications: From marketing visuals to educational illustrations.

This model showcases how generative AI is no longer limited to technical experts; it empowers anyone with a creative idea.

4. Runway Gen-2 – Video Generation Breakthrough

While the models above focus on still images, Runway Gen-2 brings generative AI to video. It lets creators generate short video clips from text prompts, images, or both, a leap beyond static imagery.

Why It Matters

- Expands creative possibilities: Filmmakers, marketers, and educators can produce short, polished clips without cameras, sets, or crews.

- Works alongside Stability AI tools: Because Gen-2 accepts image prompts, users can create a still with Stable Diffusion and animate it in Gen-2 for end-to-end content creation.

- Shared open-source roots: Runway co-developed the original Stable Diffusion model, so its work and the Stability AI community have a common origin, even though Gen-2 itself is proprietary.

The model exemplifies how generative AI is moving toward multimodal content creation, blending images, video, and even audio.

5. LLaMA 3 – Large Language Models Empowering Creativity

LLaMA 3, developed by Meta, is a state-of-the-art large language model (LLM) designed for text generation. Although it works only with text, it fits naturally into generative AI workflows alongside image models.

Applications

- Content generation: Automated blog writing, story creation, and ad copy generation.

- AI-driven storytelling: Paired with image-generation models, it can create entire narratives with illustrations.

- Openly available weights: Researchers and developers can download and fine-tune it for domain-specific tasks under Meta’s license terms.

Impact on Stability AI: By combining LLaMA 3 with image models like Stable Diffusion, creators can produce fully integrated multimedia content, expanding the reach of generative AI beyond visuals.
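The LLaMA 3 plus Stable Diffusion pairing can be sketched as a small orchestration function: the language model outlines a story and writes each scene, and the image model illustrates it. In this minimal Python sketch, `write` and `draw` are hypothetical adapters you would wire to a real LLM and a real image model; the prompts and the three-scene structure are illustrative assumptions, and the fake adapters at the bottom exist only so the example runs without any model.

```python
from collections.abc import Callable

TextModel = Callable[[str], str]     # prompt -> generated text (e.g. an LLM adapter)
ImageModel = Callable[[str], bytes]  # prompt -> encoded image (e.g. a diffusion adapter)


def illustrated_story(premise: str, write: TextModel, draw: ImageModel,
                      style: str = "watercolor illustration") -> list[tuple[str, bytes]]:
    """Outline a story, then write and illustrate each scene in order."""
    outline = write(f"Outline a short story in 3 scenes, one per line: {premise}")
    scenes = [line.strip() for line in outline.splitlines() if line.strip()]
    pages = []
    for scene in scenes:
        text = write(f"Write one paragraph for this scene: {scene}")
        image = draw(f"{scene}, {style}")  # reuse the scene line as the image prompt
        pages.append((text, image))
    return pages


# Fake adapters so the sketch runs without any model installed.
def fake_write(prompt: str) -> str:
    if prompt.startswith("Outline"):
        return "A fox finds a map\nThe fox crosses a river\nThe fox finds treasure"
    return "Paragraph about: " + prompt.split(": ", 1)[1]


def fake_draw(prompt: str) -> bytes:
    return prompt.encode("utf-8")


pages = illustrated_story("a curious fox", fake_write, fake_draw)
print(len(pages), pages[0][0])
```

Keeping the models behind plain callables means either side can be swapped (for example, a hosted API instead of local weights) without touching the pipeline logic.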

6. Midjourney – Artistic AI with a Creative Edge

Midjourney focuses on producing highly stylized, artistic images. Where Stable Diffusion emphasizes flexibility and customization, Midjourney, a proprietary subscription service, emphasizes a distinctive artistic look out of the box, which appeals to designers and digital artists.

Key Features

- Customizable artistic styles: Generate images that mimic famous art movements or create unique aesthetics.

- Community-driven: Users share prompts and results in a large, active community, historically centered on Discord, which encourages collaboration and idea-sharing.

- Complementary use: Although it does not integrate directly with Stability AI models, it can sit alongside them in a broader creative workflow.

Significance

Midjourney shows that generative AI isn’t just about realism; it’s also about imagination and style, complementing Stability AI’s open-source initiatives with professional-grade artistry.

7. Google Generative AI Tools – AI-Powered Ecosystem

Google has invested heavily in generative AI beyond Imagen, offering tools for text, image, and audio generation. These proprietary tools accelerate adoption across industries, particularly through their integration into Google Workspace and Google Cloud services.

Key Highlights

- Text-to-text generation: Summarization, paraphrasing, and AI-assisted content writing.

- Image and audio synthesis: Multi-modal content creation for businesses and creatives.

- Accessibility via APIs: Developers can integrate these tools into apps or workflows.

Impact

Google’s generative AI tools demonstrate how enterprise-level support can complement open-source initiatives such as Stability AI’s, providing hybrid solutions for both individual creators and large organizations.

How Stability AI Is Revolutionized by These Models

The combination of open-source generative AI models and Google’s proprietary tools creates a powerful synergy:

- Enhanced creativity: Artists and developers can leverage multiple AI models to produce richer, more nuanced content.

- Democratization of AI: Open-source models allow anyone to experiment, while Google tools provide enterprise-grade reliability.

- Cross-industry applications: From advertising and gaming to healthcare and education, AI is being adopted across sectors.

- Rapid innovation: Open collaboration between Stability AI, developers, and global AI platforms accelerates technological progress.

By embracing both open-source and commercial AI models, Stability AI is positioning itself as a central hub in the generative AI revolution of 2025.

Future Trends in Generative AI

1. Multimodal AI Integration

AI systems will increasingly combine text, image, audio, and video, enabling fully immersive content creation.

2. Real-Time Content Generation

Expect near-instant AI-generated media for live streaming, interactive experiences, and gaming.

3. Ethical and Responsible AI

Open-source models expose their code and weights to public scrutiny, which helps researchers find and mitigate biases and supports responsible use of AI tools.

4. Cloud and Edge AI

Hybrid solutions using cloud resources and on-device processing will make AI faster, more accessible, and more privacy-conscious.

Conclusion

The rise of open-source generative AI models and Google generative AI tools is transforming Stability AI and the broader creative ecosystem. These seven models and tools exemplify how AI is no longer confined to labs — it’s empowering anyone to create, innovate, and redefine what’s possible.

Whether you are an artist, a developer, a business leader, or a tech enthusiast, 2025 marks the year in which generative AI became not just a tool but a partner in creativity and innovation. Stability AI stands at the forefront, blending openness, accessibility, and technical excellence to revolutionize how we generate, experience, and interact with digital content.