Introduction

Artificial Intelligence (AI) has shifted from being a futuristic concept to becoming an essential part of daily life, powering everything from smart assistants to medical diagnostics.

Two of the most impactful areas in this domain are Explainable Artificial Intelligence (XAI) and Natural Language Processing (NLP). Both fields are at the heart of the current AI revolution.

Yet, as AI systems grow more powerful, the call for openness, transparency, and accessibility has never been louder.

This blog, Open Source AI Hub: Explainable AI & NLP Insights, aims to explore how open source initiatives are shaping the future of AI, why explainability is crucial, and how NLP innovations are transforming human–machine communication. To learn more about open source AI platforms, visit https://www.digitalocean.com/resources/articles/open-source-ai-platforms

The Rise of Open Source AI

Open source software has long been a catalyst for innovation. Projects like Linux, Apache, and TensorFlow have shown that collaboration accelerates technological progress. In AI, open source is even more powerful because:

Democratization of Technology

Open source AI makes advanced tools available to everyone, not just big companies with large budgets.

Students can learn and experiment without worrying about costs.

Researchers gain access to the same cutting-edge models as industry leaders.

Startups can innovate quickly without paying for expensive software.

This levels the playing field in technology.

As a result, more people can contribute to AI progress.

Rapid Innovation

In open source communities, developers from all corners of the world actively collaborate and contribute.

They freely share models, datasets, and fresh ideas without barriers, making innovation more accessible.

When someone discovers a mistake or bug, community members can often fix it quickly.

This teamwork ensures that open source AI projects remain reliable and continuously updated.

New features, tools, and improvements often arrive faster than in closed, proprietary projects.

The open exchange of knowledge encourages experimentation and creative problem-solving.

Developers can learn from one another, building on shared successes and avoiding repeated mistakes.

As a result, the pace of AI development accelerates beyond what a single organization could achieve.

The community-driven approach allows even small contributors to make a big impact.

Ultimately, everyone benefits from this rapid cycle of progress and collective intelligence.

Transparency

Open source AI makes the code and methods visible to all.

Anyone can look at how the system works inside.

Researchers can test and verify if the AI is reliable.

Flaws or biases are easier to identify and correct.

This builds trust between users and the technology.

Transparency ensures AI is not a “black box.”

Global Collaboration

People from many countries and backgrounds can join open source projects.

Different perspectives help solve problems more creatively.

Cultural and language diversity improves AI models for global use.

Challenges like bias, fairness, or efficiency are tackled together.

This worldwide teamwork makes AI more inclusive.

Collaboration leads to better and stronger solutions.

Explainable AI (XAI): Why Transparency Matters

Explainable AI (XAI) covers methods that make an AI model's decisions understandable to humans.

Instead of returning only an answer, an explainable system can show which inputs influenced that answer and how strongly.

Key Reasons for Explainability

Trust and Accountability

People need to trust AI before they use it in real life.

Professionals, like doctors, cannot accept results blindly.

Explanations show how the AI reached its decision.

This builds confidence in sensitive areas like healthcare.

Accountability helps track mistakes and fix them.

Trust makes users more willing to adopt AI.

Ethics and Fairness

AI can learn hidden bias from training data.

Biased data can create unfair or harmful results.

Explainable AI helps uncover these hidden issues.

Developers can correct the bias once it is exposed.

This ensures fairer and more balanced outcomes.

Ethical AI benefits everyone, not just certain groups.

Legal Compliance

Many jurisdictions require certain AI systems to be transparent.

The EU's GDPR is widely cited as giving people a “right to explanation” for automated decisions that significantly affect them.

Under such rules, high-impact automated decisions may need to come with meaningful reasons.

Companies may have to document and justify how their AI models work.

Explainability helps avoid legal risks and penalties.

Compliance improves public trust in AI systems.

Improved Debugging

AI systems sometimes fail in unclear ways.

Wrong results are hard to fix without explanations.

Explainability shows what influenced a model’s output.

This makes errors easier to detect and solve.

Developers can improve models faster and more reliably.

Clear debugging leads to stronger AI performance.
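One common way to find out what influenced a model's output is permutation importance: shuffle a single input feature across the dataset and measure how much accuracy drops. The sketch below is a minimal pure-Python illustration; `toy_model`, the data, and the helper names are hypothetical, and in practice a library routine such as scikit-learn's `permutation_importance` would be the usual choice.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows the model classifies correctly."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop when each feature column is shuffled (bigger drop = more influence)."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by a shuffled value.
        shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

# Hypothetical toy model: uses feature 0 and ignores feature 1 entirely.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0

# Hypothetical data; labels come from the model, so baseline accuracy is 1.0.
rows = [[0.1, 0.9], [0.2, 0.1], [0.7, 0.4], [0.9, 0.8], [0.3, 0.6], [0.8, 0.2]]
labels = [toy_model(row) for row in rows]

print(permutation_importance(toy_model, rows, labels, n_features=2))
```

Because `toy_model` never reads feature 1, shuffling that column cannot change any prediction, so its importance is exactly 0.0. That is the kind of signal that helps a developer spot a feature the model ignores, or one it leans on too heavily. A single shuffle is noisy; averaging over several seeds gives steadier estimates.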

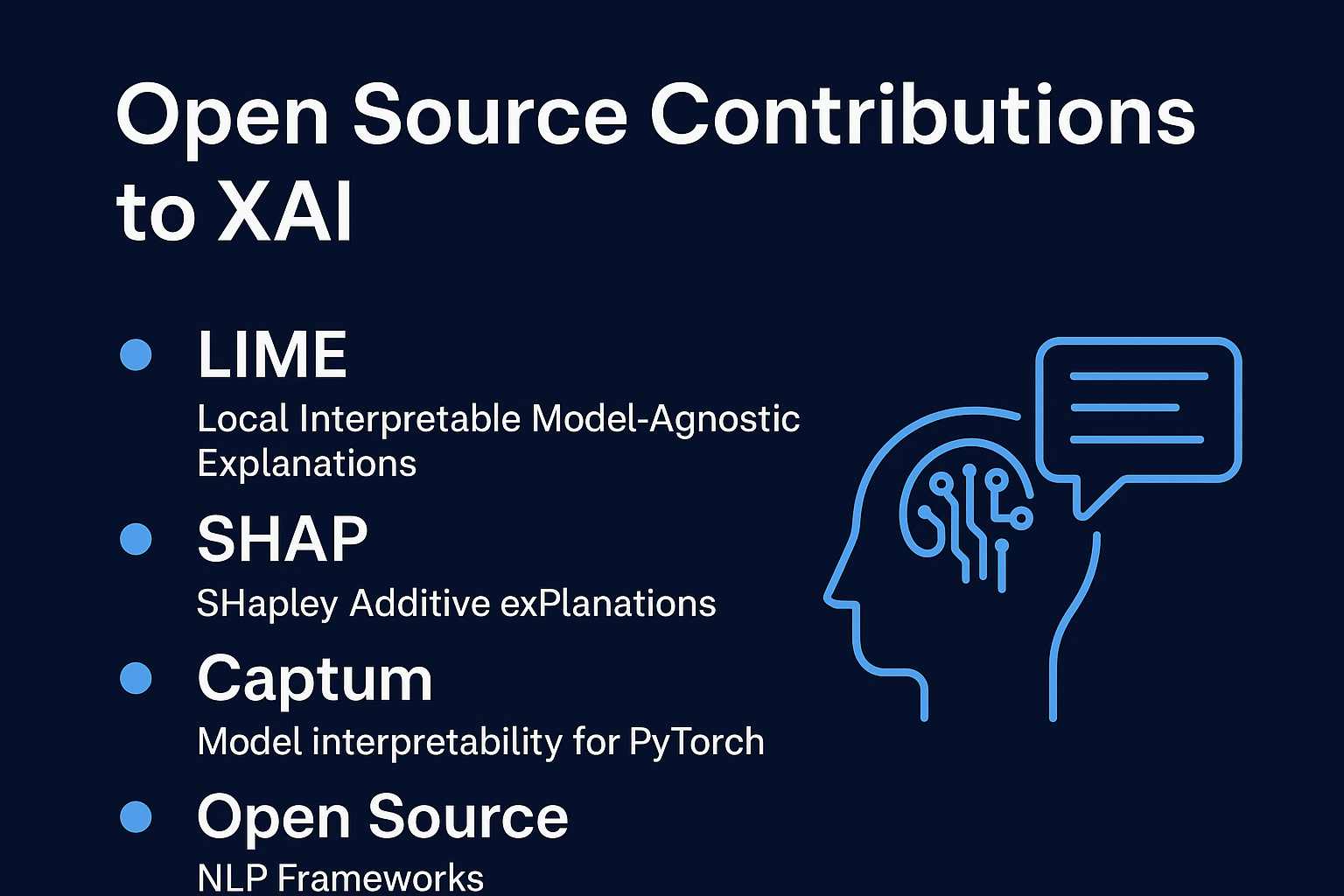

Open Source Contributions to XAI

Open source tools like LIME, SHAP, and Captum are transforming how researchers understand AI models.

These frameworks provide visualizations that reveal why a model makes certain predictions or decisions.

With SHAP, for example, each feature receives a contribution score for an individual prediction, based on Shapley values from game theory, making results easier to interpret.

Captum integrates directly with PyTorch, allowing developers to test and embed explainability into their workflows.

Because these tools are freely available, researchers and developers everywhere can access them without barriers.

This openness promotes transparency, accountability, and responsible AI adoption across industries.

In short, open source AI empowers the global community to make machine learning more interpretable and trustworthy.
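SHAP's contribution scores build on Shapley values: a feature's score is its marginal effect on the prediction, averaged over all possible orders in which features could be added. The brute-force sketch below illustrates that definition for a hypothetical three-feature model, assuming that a "missing" feature is replaced by a fixed baseline value. The SHAP library itself uses faster, model-specific algorithms and background datasets rather than this exhaustive loop.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    Assumption: a feature outside a coalition is set to its baseline value.
    The loop visits every subset, so cost grows as 2**n; fine only for a few features.
    """
    n = len(x)
    values = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = [x[j] if j in subset or j == i else baseline[j] for j in range(n)]
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                values[i] += weight * (predict(with_i) - predict(without_i))
    return values

# Hypothetical model: an additive term plus an interaction between features 1 and 2.
def interaction_model(f):
    return 2.0 * f[0] + f[1] * f[2]

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(interaction_model, x, baseline)
print(phi)
# Additivity check: the scores sum to prediction minus baseline prediction.
print(sum(phi), interaction_model(x) - interaction_model(baseline))
```

Here feature 0 receives 2.0, and the interaction term `f[1] * f[2] = 6.0` is split evenly, 3.0 each, between features 1 and 2 (up to floating-point rounding). The scores also sum to the gap between the prediction and the baseline prediction, the additivity property that makes SHAP values easy to read.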

Natural Language Processing (NLP): Giving Machines a Voice

Natural Language Processing (NLP) is a key branch of AI that focuses on bridging the gap between humans and machines through language.

It allows computers to understand, interpret, and even generate human language in a meaningful way.

From smart chatbots to virtual voice assistants like Alexa and Siri, NLP powers everyday tools we rely on.

Machine translation tools, such as Google Translate, also depend on NLP to break down language barriers.

Sentiment analysis uses NLP to help businesses understand customer feelings through reviews and social media.

By analyzing patterns in text and speech, NLP creates more natural human–AI communication.

Open source AI frameworks make these tools widely available, enabling faster progress in the field.

Developers and researchers worldwide contribute to improving NLP models, making them smarter and more accurate.

As a result, NLP is transforming how humans interact with technology on a global scale.


Key Applications of NLP

Conversational AI

Conversational AI powers chatbots and voice assistants like Alexa, Siri, and Google Assistant.

These systems use NLP to understand spoken or written language.

They convert user queries into machine-readable instructions.

The AI then provides useful answers, actions, or recommendations.

Businesses use conversational AI for customer support and automation.

It reduces response time while improving user experience.

As NLP improves, conversations with AI feel more natural and human-like.
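The step of turning a user query into machine-readable instructions can be sketched with a tiny rule-based intent parser. This is only an illustration under simple assumptions: the intent names, keyword sets, and `parse_query` helper are hypothetical, and assistants such as Alexa or Siri typically rely on trained NLP models rather than keyword overlap.

```python
import re

# Hypothetical intents and trigger words; real assistants learn these from data.
INTENT_KEYWORDS = {
    "set_alarm": {"alarm", "wake"},
    "get_weather": {"weather", "rain", "forecast"},
    "play_music": {"play", "song", "music"},
}

def parse_query(text):
    """Turn a free-text request into a structured, machine-readable command."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    # Pick the intent whose keywords overlap most with the query.
    best_intent, best_overlap = "unknown", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        overlap = len(tokens & keywords)
        if overlap > best_overlap:
            best_intent, best_overlap = intent, overlap
    command = {"intent": best_intent}
    # Extract a simple time slot such as "7", "7:30", or "7:30 am".
    time_match = re.search(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm)?)\b", text.lower())
    if best_intent == "set_alarm" and time_match:
        command["time"] = time_match.group(1).strip()
    return command

print(parse_query("Wake me up at 7:30 am tomorrow"))
print(parse_query("Will it rain today?"))
```

The result is a plain dictionary (an intent plus optional slots such as `time`) that downstream code can act on directly.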

Machine Translation

Machine translation allows text to be converted from one language to another.

Open source tools like MarianMT and OpenNMT make this widely accessible.

These systems support dozens of global languages.

They are used in apps, websites, and communication platforms.

Translation helps break down barriers between cultures and communities.

Businesses use it to reach international customers.

Continuous improvements make translations more accurate and context-aware.

Sentiment Analysis

Sentiment analysis is the study of emotions and opinions in text.

Companies use it to analyze tweets, reviews, and social media posts.

It helps brands understand customer satisfaction or frustration.

Positive, negative, or neutral tones are identified automatically.

This guides businesses in improving products and services.

Governments and researchers use it to study public opinion.

Sentiment analysis offers real-time feedback at a large scale.
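A very small lexicon-based classifier shows the basic idea of labeling text as positive, negative, or neutral. The word scores and negation list below are hypothetical; real sentiment tools use much larger lexicons or trained models, but the scoring logic is similar in spirit.

```python
import re

# Hypothetical mini-lexicon; real tools use far larger lexicons or trained models.
LEXICON = {"great": 2, "love": 2, "good": 1, "fast": 1,
           "slow": -1, "bad": -1, "broken": -2, "terrible": -2}
NEGATIONS = {"not", "never", "no", "don't", "isn't"}

def sentiment(text):
    """Return (label, score): sum word scores, flipping a word that follows a negation."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        value = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATIONS:
            value = -value
        score += value
    if score > 0:
        return "positive", score
    if score < 0:
        return "negative", score
    return "neutral", score

reviews = [
    "Great phone, I love it",
    "Delivery was slow and the box was broken",
    "It arrived on Tuesday",
    "Not good at all",
]
for review in reviews:
    print(f"{review!r} -> {sentiment(review)}")
```

The negation rule is what turns "Not good at all" negative; without it, the word "good" alone would mark the review as positive.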

Document Summarization

Summarization tools shorten long documents into clear summaries.

NLP models identify the most important parts of text.

They remove unnecessary details while keeping the key meaning.

Businesses use this to process reports, legal papers, and research.

It saves time by making huge data sets more digestible.

Automated summaries improve productivity for professionals.

This is especially useful in education, law, and journalism.
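One classic extractive approach scores each sentence by how frequent its words are across the whole document and keeps the top-scoring sentences. The sketch below assumes a tiny hypothetical stopword list and naive sentence splitting; modern open source summarizers often use transformer models that can also write new (abstractive) sentences.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "was", "it", "for", "on", "with"}

def content_words(text):
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def summarize(text, n_sentences=2):
    """Extractive summary: keep the sentences whose words are most frequent overall."""
    # Naive split after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        # Average rather than total frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in tokens) / max(len(tokens), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # Keep the chosen sentences in their original order for readability.
    return " ".join(sentences[i] for i in sorted(ranked[:n_sentences]))

document = ("The court reviewed the contract dispute. The contract required delivery by March. "
            "Lunch was served at noon. The court found the contract was breached by late delivery.")
print(summarize(document))
```

The off-topic sentence about lunch shares no frequent words with the rest of the text, so it gets the lowest score and never makes the cut.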

Search and Information Retrieval

Search engines rely heavily on NLP to understand queries.

They go beyond keywords to capture user intent.

NLP helps match searches with the most relevant information.

This improves accuracy and quality of search results.

Recommendation systems also use NLP for personalization.

From Google to e-commerce sites, NLP powers fast information access.

Better NLP means smarter, more user-friendly search experiences.
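A classic building block behind keyword search is TF-IDF ranking: words that are frequent in a document but rare across the collection get more weight. The sketch below is a minimal version with an assumed smoothed IDF formula (one of several common variants) and hypothetical documents. Modern search engines go beyond such keyword scores, typically combining them with learned models of user intent.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())

def compute_idf(docs):
    """Smoothed inverse document frequency (assumed variant: log(N / df) + 1)."""
    n = len(docs)
    df = Counter(word for doc in docs for word in set(tokenize(doc)))
    return {word: math.log(n / count) + 1.0 for word, count in df.items()}

def search(query, docs, idf):
    """Rank documents by the summed TF-IDF weight of the query words they contain."""
    query_words = set(tokenize(query))
    results = []
    for i, doc in enumerate(docs):
        tf = Counter(tokenize(doc))
        total = sum(tf.values()) or 1  # avoid dividing by zero for empty documents
        score = sum(tf[w] / total * idf.get(w, 0.0) for w in query_words)
        if score > 0:
            results.append((score, i))
    return [docs[i] for score, i in sorted(results, reverse=True)]

# Hypothetical help-center pages.
docs = [
    "Return policy for damaged items",
    "Shipping times for international orders",
    "How to return a damaged laptop",
]
idf = compute_idf(docs)
print(search("return damaged laptop", docs, idf))
```

The laptop page ranks first because it contains all three query words, including the rarer "laptop"; the shipping page shares no query words and is left out.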

Open Source NLP Ecosystem

Many of the most influential NLP breakthroughs in recent years have been shared through open source projects. Hugging Face’s Transformers library provides access to pre-trained models like BERT, GPT, and RoBERTa, which are widely used in academia and industry.

Similarly, spaCy offers efficient NLP pipelines for tasks like named entity recognition and dependency parsing, while NLTK (Natural Language Toolkit) remains a popular educational resource for learning NLP basics.

Open source NLP is not just about tools; it’s about building inclusive AI that understands multiple languages and cultural contexts. For example, community-driven projects are training models on underrepresented languages to ensure that AI benefits everyone, not just speakers of English or Mandarin.

The Intersection of XAI and NLP

When combined, XAI and NLP create powerful, trustworthy systems. For example:

Explainable Chatbots

Chatbots today often provide answers without showing how they were generated.

With XAI, a chatbot could explain why it chose a particular response.

This builds greater trust between users and the AI system.

Explanations also help users learn how to ask better questions.

Businesses can use explainable chatbots to provide more transparent support.

Such chatbots improve customer satisfaction by reducing confusion.

Over time, this makes human–AI conversations feel more reliable and safe.
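A retrieval-style FAQ bot can report which words in the question led to its answer, one simple form of the explanation described above. The FAQ entries, keyword sets, and `answer_with_reason` function are hypothetical; explanations for large generative chatbots are much harder to produce and remain an active research area.

```python
import re

# Hypothetical FAQ: each answer is paired with the keywords that trigger it.
FAQ = [
    ({"refund", "return", "money"}, "You can request a refund within 30 days."),
    ({"shipping", "delivery", "track"}, "Orders usually ship within 2 business days."),
    ({"password", "login", "reset"}, "Use the 'Forgot password' link to reset it."),
]

def answer_with_reason(question):
    """Return the best-matching answer plus the words that caused the match."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    best_answer, best_matched = None, set()
    for keywords, answer in FAQ:
        matched = words & keywords
        if len(matched) > len(best_matched):
            best_answer, best_matched = answer, matched
    if best_answer is None:
        return "Sorry, I don't know.", "No known keywords were found in your question."
    reason = "Chosen because your question mentioned: " + ", ".join(sorted(best_matched))
    return best_answer, reason

answer, reason = answer_with_reason("How do I track my delivery?")
print(answer)
print(reason)
```

Showing the matched words also hints to users how to rephrase a question when the bot picks the wrong answer.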

Transparent Sentiment Analysis

Companies rely on sentiment analysis to measure customer feelings.

Traditional models show results (positive, negative, neutral) but not reasons.

XAI can highlight which words or phrases influenced the classification.

This helps businesses understand customer opinions in more depth.

It also makes it easier to spot errors in the analysis.

Transparency allows companies to act on insights with confidence.

Clear sentiment explanations lead to better business decisions.
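Highlighting influential words can be sketched with leave-one-out (occlusion) attribution: remove each word, rescore the text, and record how much the score changes. The word weights below stand in for a trained model and are purely hypothetical; for real models, LIME, SHAP, and Captum provide more robust attribution methods.

```python
import re

# Hypothetical word weights standing in for a trained sentiment model.
WEIGHTS = {"love": 2.0, "great": 1.5, "slow": -1.0, "broken": -2.0, "refund": -0.5}

def score(words):
    """Toy model: sum of word weights (> 0 means positive sentiment)."""
    return sum(WEIGHTS.get(w, 0.0) for w in words)

def explain(text):
    """Leave-one-out attribution: how much the score changes when each word is removed."""
    words = re.findall(r"[a-z]+", text.lower())
    full = score(words)
    attributions = []
    for i, word in enumerate(words):
        without = words[:i] + words[i + 1:]
        attributions.append((word, full - score(without)))
    # Most influential words first, by absolute effect.
    return sorted(attributions, key=lambda pair: abs(pair[1]), reverse=True)

for word, effect in explain("I love the design but the hinge arrived broken")[:2]:
    print(f"{word:>8} {effect:+.1f}")
```

For this review the overall score is 0 (neutral), and the explanation shows why: "love" pushes it up by 2.0 while "broken" pulls it down by the same amount, so a business would see mixed feedback rather than indifference.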

Legal and Healthcare AI

Law and healthcare are fields where AI decisions carry serious weight.

Professionals cannot trust results unless they understand the reasoning.

XAI ensures that AI systems highlight key evidence or factors.

For example, a medical system might show which symptoms shaped its diagnosis.

In legal cases, AI could explain which documents supported its conclusions.

This gives professionals confidence in making final decisions.

Explainable AI makes NLP safer to use in sensitive, high-stakes areas.

Imagine a medical NLP model that suggests a diagnosis but also highlights which patient symptoms and lab results influenced the outcome. Such transparency would make AI a true partner rather than a mysterious tool.

Challenges and Future Directions

While open source AI, XAI, and NLP are progressing rapidly, several challenges remain:

Bias and Fairness

AI systems learn patterns directly from the data they are trained on.

If the training data contains bias, the model may repeat those biases.

Even with explainability tools, unfair decisions can still occur.

For example, biased hiring data could lead to unfair job screening.

Ensuring fairness requires constant monitoring and retraining of models.

Diverse datasets help reduce bias and make systems more inclusive.

Without fairness, AI risks reinforcing social inequalities.
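One basic fairness check for the hiring example is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio between the lowest and highest rate. The decisions are made-up data, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment-selection guidelines, a screening heuristic rather than a complete fairness test.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Map each group to the fraction of its candidates who were selected."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(decisions):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical screening outcomes: (group, passed_screening) for 200 applicants.
decisions = ([("A", True)] * 40 + [("A", False)] * 60 +
             [("B", True)] * 20 + [("B", False)] * 80)

print(selection_rates(decisions))
ratio = disparate_impact_ratio(decisions)
print(f"ratio = {ratio:.2f}")
if ratio < 0.8:  # "four-fifths" rule of thumb; a screening heuristic, not proof of (un)fairness
    print("Possible adverse impact: review the model and its training data.")
```

Here group B is selected at half the rate of group A (0.2 versus 0.4), so the check flags the model for review. Running it regularly on fresh decisions is one concrete form of the constant monitoring described above.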

Scalability

Modern AI models, like GPT-4 and LLaMA, are extremely large.

Explaining their decisions requires huge amounts of computing power.

Not all organizations can afford such resources.

This creates a gap between large companies and smaller groups.

Researchers are exploring ways to simplify interpretability for big models.

Scalable explainability is key to making AI accessible to everyone.

Without it, transparency may remain limited to a few players.

Standardization

The AI field lacks a single framework for measuring explainability.

Different tools use different methods to provide explanations.

This makes results difficult to compare across systems.

A universal standard would bring clarity and consistency.

It would also help regulators evaluate AI systems fairly.

Researchers are pushing for common guidelines and benchmarks.

Standardization ensures explainability is meaningful, not just a buzzword.

Privacy Concerns

NLP models often train on sensitive personal or business data.

Transparency tools may reveal details that compromise privacy.

For example, explanations could accidentally expose user information.

Balancing explainability with data protection is a big challenge.

Privacy laws like GDPR require careful handling of personal text.

Secure methods such as anonymization are needed during training.

Strong privacy practices are essential for responsible AI use.
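A first line of defense is to redact obvious identifiers before text is used for training. The regular expressions below are simplified assumptions that catch only common email and phone formats; the `redact` helper is hypothetical, not part of any particular library.

```python
import re

# Simplified, assumed patterns: many real-world formats will not match.
PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\+?\d[\d\s-]{7,}\d"), "[PHONE]"),  # 9+ digits, spaces, or hyphens
]

def redact(text):
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text

print(redact("Contact Priya at priya.k@example.com or +1 555-010-4477 about order 42."))
```

The name "Priya" is left untouched, which shows why real pipelines usually add a named entity recognizer and human review on top of pattern matching.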

Future Opportunities

Hybrid Models

Traditional symbolic AI uses clear, rule-based logic.

Deep learning, on the other hand, is powerful but often seen as a “black box.”

By combining both approaches, we can create AI that is accurate yet easier to understand.

Symbolic rules make the reasoning process more transparent.

Deep learning provides flexibility and the ability to handle complex data.

Together, hybrid models balance performance with explainability.

This makes AI systems more reliable and trustworthy in critical fields.
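A hybrid system can be sketched as explicit, human-readable rules that run first, with a learned model handling the remaining cases. Everything here is hypothetical: the loan-screening scenario, the rules, and `learned_score`, whose made-up weights stand in for a trained model.

```python
# Hypothetical loan-screening example: every rule, weight, and threshold is made up.

def learned_score(applicant):
    """Stand-in for a trained model's repayment probability (illustrative weights only)."""
    raw = 0.3 + 0.5 * applicant["income_ratio"] + 0.2 * applicant["on_time_rate"]
    return max(0.0, min(1.0, raw))

# Symbolic layer: (human-readable description, condition, forced decision).
RULES = [
    ("applicant is under 18", lambda a: a["age"] < 18, "reject"),
    ("existing debt exceeds 90% of income", lambda a: a["debt_ratio"] > 0.9, "reject"),
]

def decide(applicant, threshold=0.6):
    """Apply transparent rules first; fall back to the learned score otherwise."""
    for description, condition, decision in RULES:
        if condition(applicant):
            return decision, f"rule: {description}"
    score = learned_score(applicant)
    decision = "approve" if score >= threshold else "refer to human review"
    return decision, f"model score {score:.2f} vs threshold {threshold}"

print(decide({"age": 17, "income_ratio": 0.9, "on_time_rate": 1.0, "debt_ratio": 0.1}))
print(decide({"age": 30, "income_ratio": 0.6, "on_time_rate": 0.9, "debt_ratio": 0.3}))
```

Every decision carries a reason string: either the exact rule that fired, or the model score and threshold, so even the learned path is partly traceable.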

Multilingual Explainability

Natural Language Processing (NLP) is growing beyond English into many world languages.

Models trained on different languages need equally clear explanations.

Biases and errors in non-English data must also be detected.

Explainability tools must adapt to cultural and linguistic diversity.

This ensures fair and transparent AI for global users.

Without multilingual explainability, some groups may be left behind.

Building inclusive AI means explanations must work across all languages.

Open Source Governance

Open source projects thrive on community contributions and collaboration.

However, without guidelines, AI can be misused or developed irresponsibly.

Governance helps set ethical standards for AI development.

Communities may define rules for fairness, privacy, and accountability.

Shared guidelines help distribute AI's benefits more fairly.

Governance builds trust among developers, users, and policymakers.

Strong open source governance keeps AI safe and responsible for all.

Why Open Source Matters More Than Ever

The synergy between open source, XAI, and NLP highlights a powerful vision for the future of AI.

By keeping tools, datasets, and frameworks freely available, the community helps keep AI development transparent and fair.

Open access prevents innovation from being locked behind paywalls or restricted to wealthy corporations.

While large companies do benefit from open source, the real power lies in its accessibility to everyone.

Students, educators, and independent researchers can experiment, test ideas, and build new solutions without heavy costs.

This inclusivity fosters creativity and sparks innovation from diverse perspectives across the globe.

Open source also encourages accountability, reducing the risks of biased or unethical AI systems.

Most importantly, it helps AI grow as a shared human achievement, not as a monopoly of a few.

In this way, open source protects the future of AI as a truly collaborative effort.

Conclusion

Explainable AI and Natural Language Processing are not just technical fields; they are movements toward responsible, inclusive, and human-centered AI. Open source plays a pivotal role in accelerating research, enabling collaboration, and fostering trust in AI systems.

At Open Source AI Hub, our mission is to provide clear insights into these domains, helping developers, researchers, and curious minds understand how transparency and language intelligence are reshaping technology.

The future of AI lies not in secrecy but in collaboration, and by embracing open source XAI and NLP, we move closer to a world where AI is powerful, understandable, and beneficial for all.
